Big data refers to collections of data so large and complex that conventional software applications struggle to process them. Jet engine telemetry, stock exchange transactions, and social media sites are typical sources of data for big data analytics. Extracting value from such data depends on handling it well at every stage of the process. Our experts provide guidance on implementing big data projects for masters students.

Why do we use big data?

Companies use big data to improve their marketing campaigns and overall performance. They also combine big data with machine learning for tasks such as predictive modeling, model training, and other advanced analytics applications. Big data is not tied to any specific volume of data. These are the main reasons for using big data.

How is big data collected?

Big data is collected from sources such as social media, loyalty cards, analytics, maps, and transactional data.

Where is big data stored?

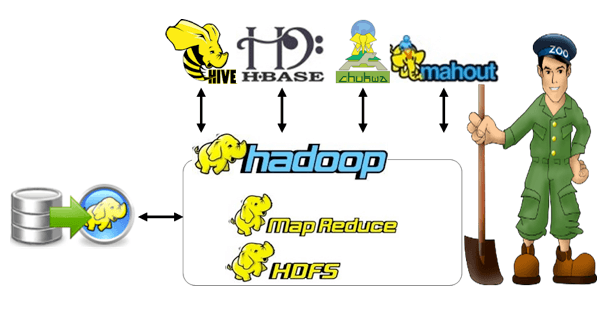

The Hadoop Distributed File System (HDFS) is the storage system for Hadoop data. It splits files into smaller blocks and distributes them across the nodes of a cluster, where the blocks are kept on physical storage units (for example, internal disk drives). There are four types of storage architectures in big data:

- Object-based storage

  - Data is stored in flexible containers

  - It permits peer-to-peer file transfer

  - Objects are located through hash tables

- All-flash (solid-state drive) arrays

  - It offers the features of a traditional storage array

  - The implementation is similar to JBOD (just a bunch of disks)

- Network-attached storage

  - It has its own distributed file system

  - It scales by adding capacity

- Distributed nodes

  - Storage is low-cost commodity hardware attached directly to the computer server
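The block-based storage idea described for HDFS above can be illustrated with a minimal sketch. It is not HDFS code: the function names, the tiny block size, the node names, and the round-robin placement are all assumptions made for illustration. A real HDFS deployment uses much larger blocks and its own replica placement policy.

```python
def split_into_blocks(data: bytes, block_size: int) -> list[bytes]:
    """Cut a byte string into fixed-size blocks; the last block may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_blocks(blocks, nodes, replication=3):
    """Assign every block to `replication` distinct nodes, round-robin.

    This is a toy policy chosen for readability, not the HDFS policy.
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    placement = {}
    for index in range(len(blocks)):
        placement[index] = [
            nodes[(index + offset) % len(nodes)] for offset in range(replication)
        ]
    return placement


# Hypothetical 1000-byte "file" and four hypothetical storage nodes.
data = b"x" * 1000
nodes = ["node-a", "node-b", "node-c", "node-d"]

blocks = split_into_blocks(data, block_size=256)
placement = place_blocks(blocks, nodes, replication=3)

for index, replicas in placement.items():
    print(f"block {index}: {len(blocks[index])} bytes on {replicas}")
```

Because every block lives on several nodes, losing one disk does not lose the file, and joining the blocks in order reproduces the original data.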

Top 5 latest big data projects for masters students

- Skip-gram approach to distributed representations

- Scalable HDT semantic data compression with MapReduce techniques

- Collaborative spam detection and big data paradigm preservation

- Computing healthcare results according to the applicable healthcare standards

- Imputing missing data in multivariate time series
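As an illustration of the last project topic, the sketch below fills gaps in a small multivariate time series by linear interpolation. Linear interpolation is only one simple baseline chosen here for clarity, not the method of any particular project; the function name and the sample readings are hypothetical.

```python
def interpolate_missing(series):
    """Fill None gaps by linear interpolation between the nearest known values.

    Gaps at the start or end are filled with the nearest known value.
    """
    known = [i for i, value in enumerate(series) if value is not None]
    if not known:
        raise ValueError("series has no observed values")
    filled = list(series)
    for i, value in enumerate(series):
        if value is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            filled[i] = series[right]
        elif right is None:
            filled[i] = series[left]
        else:
            weight = (i - left) / (right - left)
            filled[i] = series[left] + weight * (series[right] - series[left])
    return filled


# Hypothetical sensor readings; None marks a missing observation.
readings = {
    "temperature": [20.0, None, 22.0, None, None, 25.0],
    "pressure": [None, 1010.0, 1012.0, None, 1016.0, None],
}

# Each variable is imputed independently of the others.
imputed = {name: interpolate_missing(values) for name, values in readings.items()}
print(imputed)
```

Treating each variable independently ignores correlations between variables, which is exactly what more advanced multivariate imputation methods try to exploit.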

What problems might you face in big data projects?

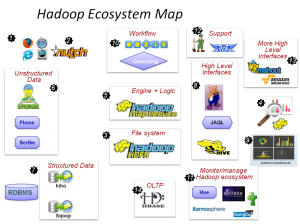

Big data is deployed in a wide range of industrial activities, and numerous research projects in big data use Hadoop. However, the field also presents challenges. Some of the research challenges in big data are listed here:

- Huge datasets

- Security and privacy

- Timing issues

- Lack of required tools

- Inadequate monitoring solutions

- Need for high-level scripting

Below, our research experts list some solutions that help research scholars proceed with their research in big data. We assist in programming big data projects for masters students.

- Machine learning approaches are used to obtain the best results and efficiency

- The selection of hardware and software tools plays a vital role

- Data verification is essential to filter out fake data
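The data verification step mentioned above can be sketched as a small rule-based filter. The record fields, the rules, and the sample values are hypothetical and chosen only to show the pattern; a real project would derive its rules from its own data.

```python
from dataclasses import dataclass


@dataclass
class Record:
    user_id: str
    amount: float
    country: str


def verify(record, seen_ids, allowed_countries):
    """Return the list of problems found in one record (empty means it passed)."""
    problems = []
    if not record.user_id:
        problems.append("missing user_id")
    elif record.user_id in seen_ids:
        problems.append("duplicate user_id")
    if record.amount < 0:
        problems.append("negative amount")
    if record.country not in allowed_countries:
        problems.append("unknown country")
    return problems


def filter_valid(records, allowed_countries):
    """Split records into accepted ones and rejected ones with their problems."""
    seen, valid, rejected = set(), [], []
    for record in records:
        problems = verify(record, seen, allowed_countries)
        if problems:
            rejected.append((record, problems))
        else:
            seen.add(record.user_id)
            valid.append(record)
    return valid, rejected


records = [
    Record("u1", 10.0, "IN"),
    Record("u1", 5.0, "IN"),   # duplicate id
    Record("u2", -3.0, "US"),  # negative amount
    Record("u3", 7.5, "XX"),   # country not in the allowed set
    Record("u4", 1.0, "US"),
]
valid, rejected = filter_valid(records, allowed_countries={"IN", "US"})
print(len(valid), "valid;", len(rejected), "rejected")
```

Keeping the rejected records together with the reasons makes it possible to audit the rules later instead of silently dropping data.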

What are the technologies you’ll need to use in big data analytics projects?

- SAS

- PHP

- Open source databases

- JavaScript

- Cloud solutions

- AWS

- Azure

- R

- Python

- Tableau

- C++

Big data tools and methodologies

- OpenRefine

- Apache Storm

- Cloudera

- MongoDB

- Cassandra

- OpenRefine

  - It is a powerful big data tool used to clean data and transform it into various formats

  - Researchers can explore massive datasets with it

  - Its notable features are:

    - It provides an expression language for data operations

    - It imports data in various formats

    - It extends datasets using various web services

    - It handles cells that contain multiple values and performs cell transformations

- Apache Storm

  - It is a distributed real-time system for processing data streams

  - Topologies can be written in various programming languages such as Java and Clojure

  - Its significant features are:

    - It supports directed acyclic graph (DAG) topologies

    - It runs on the Java virtual machine (JVM)

    - It processes data in real time

    - It can process more than a million tuples per second per node

    - A Storm topology runs until the user shuts it down

- Cloudera

  - It is one of the fastest and most secure big data technologies

  - It is a scalable platform that allows data to be collected from various environments

  - It is built on the open source Apache Hadoop distribution

  - Its best characteristics are:

    - It can spin up and terminate data clusters

    - It can develop and train data models

    - It provides real-time insights for data detection and monitoring

  - Its supporting services are:

    - Cloudera Essentials

    - Cloudera Enterprise Data Hub

    - Cloudera Data Science and Engineering

    - Cloudera Analytic Database

    - Cloudera Operational Database

- MongoDB

  - It is an open source, document-oriented NoSQL database

  - It is used to store massive volumes of data

  - Documents and collections are used in place of rows and tables

  - It is beneficial for real-time data and supports decision making

  - Its notable features are:

    - It supports rich searches, including queries by field, range queries, and regular expressions

    - It provides load balancing by splitting data across MongoDB instances

- Cassandra

  - Cassandra is one of the leading distributed database management systems among big data technologies

  - It is used to manage huge volumes of data across many servers

  - It is well suited to processing structured data sets

  - It was originally developed at Facebook as a NoSQL solution

  - It is used by various companies such as Twitter, Cisco, and Netflix

  - Its features include the following:

    - Data is replicated across multiple data centers and nodes, so when a data center or node fails, another one takes its place

    - It offers a simple query language, which makes the transition from a relational database hassle-free
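The Apache Storm model listed above, in which data flows through a directed acyclic graph of processing steps, can be sketched in plain Python. This is not the Storm API: the function names borrow Storm's spout and bolt vocabulary only for illustration, and the input is a short, finite list so that the example terminates, whereas a real topology keeps running until it is shut down.

```python
from collections import Counter


def sentence_spout(sentences):
    """Source node: emits one tuple per sentence."""
    for sentence in sentences:
        yield (sentence,)


def split_bolt(stream):
    """Processing node: turns each sentence tuple into one tuple per word."""
    for (sentence,) in stream:
        for word in sentence.lower().split():
            yield (word,)


def count_bolt(stream):
    """Processing node: emits the running count each time a word arrives."""
    counts = Counter()
    for (word,) in stream:
        counts[word] += 1
        yield (word, counts[word])


# Hypothetical input stream.
sentences = ["storm processes streams", "storm runs on the jvm"]

# Wire the graph: spout -> split -> count.
topology = count_bolt(split_bolt(sentence_spout(sentences)))
results = list(topology)
print(results)
```

Generators process one tuple at a time, which mirrors the streaming style: results are emitted as data arrives instead of after a whole batch has been collected.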
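The MongoDB search features listed above (queries by field, range queries, and regular expressions) are expressed as filter documents. The sketch below evaluates a small subset of that filter syntax against in-memory dictionaries so that it runs without a database server. The `matches` helper and the sample collection are hypothetical; only the operators `$gte`, `$lt`, and `$regex` are taken from MongoDB's query language.

```python
import re


def matches(document, query):
    """Evaluate a small subset of MongoDB-style filters against a dict."""
    for field, condition in query.items():
        value = document.get(field)
        if not isinstance(condition, dict):
            # Plain value: exact match on the field.
            if value != condition:
                return False
            continue
        for operator, operand in condition.items():
            if operator == "$gte":
                ok = value is not None and value >= operand
            elif operator == "$lt":
                ok = value is not None and value < operand
            elif operator == "$regex":
                ok = isinstance(value, str) and re.search(operand, value) is not None
            else:
                raise ValueError(f"unsupported operator: {operator}")
            if not ok:
                return False
    return True


# Hypothetical collection of documents.
collection = [
    {"name": "Asha", "age": 31, "city": "Chennai"},
    {"name": "Ben", "age": 17, "city": "Austin"},
    {"name": "Anita", "age": 45, "city": "Chennai"},
]

range_query = {"age": {"$gte": 18, "$lt": 40}}                 # range query
regex_query = {"name": {"$regex": "^A"}, "city": "Chennai"}    # regex plus field match

in_range = [d["name"] for d in collection if matches(d, range_query)]
a_names = [d["name"] for d in collection if matches(d, regex_query)]
print(in_range, a_names)
```

With a running server and a driver such as PyMongo, filter dictionaries of this shape would be passed to a collection's `find` method rather than to a hand-written matcher.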
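The Cassandra replication feature listed above, where another node takes over when one fails, can be sketched with a simple hash ring. This is a teaching model under stated assumptions, not Cassandra's implementation: the `Ring` class, the node names, the SHA-256 hash, and the single token per node are all chosen for illustration, and real clusters use their own partitioner and replication strategies.

```python
import bisect
import hashlib


class Ring:
    """Toy hash ring: each key is stored on the next N nodes clockwise."""

    def __init__(self, nodes, replication_factor=3):
        self.replication_factor = replication_factor
        # One token per node, sorted by position on the ring.
        self.tokens = sorted((self._hash(node), node) for node in nodes)

    @staticmethod
    def _hash(key):
        return int(hashlib.sha256(key.encode()).hexdigest(), 16)

    def replicas(self, key):
        """Walk clockwise from the key's position and take the next N nodes."""
        start = bisect.bisect(self.tokens, (self._hash(key), ""))
        count = min(self.replication_factor, len(self.tokens))
        return [self.tokens[(start + i) % len(self.tokens)][1] for i in range(count)]

    def read(self, key, down=()):
        """Return the first live replica for the key, or None if all are down."""
        for node in self.replicas(key):
            if node not in down:
                return node
        return None


# Hypothetical nodes spread over two data centers.
ring = Ring(["dc1-n1", "dc1-n2", "dc2-n1", "dc2-n2"], replication_factor=3)

owners = ring.replicas("user:42")
fallback = ring.read("user:42", down={owners[0]})
print("replicas:", owners)
print("served by after first replica fails:", fallback)
```

Because each key has several owners, a read can still be served after the first replica fails, which is the behavior the feature list describes.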

Our research team keeps its knowledge up to date in order to deliver the best big data projects for masters students. We have 15+ years of experience in this research field, and in that time we have fulfilled a large number of research requirements in big data analytics. As a result, we have 5000+ happy customers all over the world, and we can provide high-value, 100% plagiarism-free research work for master’s students.