Projects Using Hadoop

Projects Using Hadoop is a service built to help you succeed. We started it with one goal: to develop the Hadoop knowledge of national and international students and research scholars. Our experienced professionals are eager to mentor you and strengthen your skills and profile. Today, we offer this service through more than 50 branches worldwide. Hadoop-based big data has wide scope for real-time applications such as video sentiment analysis, content sentiment analysis, and query-based Twitter sentiment analysis. Would you like to start working toward tomorrow's success? Call us. We wish you every success in your future.

Projects Using Hadoop offers a highly challenging research environment in which to prove your knowledge and scientific ability. We are experts in implementing Apache Software Foundation projects, with a focus on helping students and research fellows integrate, deploy, and work with a wide range of structured and unstructured data using Hadoop. Each of our development projects meets its own quality standard, which sets it apart from other projects. We provide complete solutions for your academic projects using Hadoop.

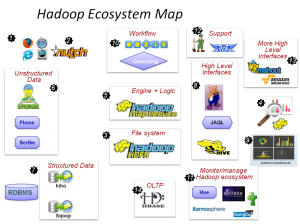

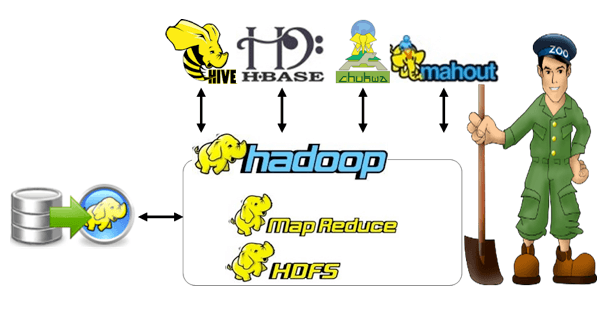

Hadoop Framework:

Data Governance and Lifecycle

- Atlas

- Falcon

Data Workflow

- WebHDFS

- NFS

- Kafka

- Flume

- Sqoop

Security (Administration, Authentication, Authorization, Auditing and Data Protection)

- Atlas

- Knox

- Ranger

- HDFS Encryption

Operations (Monitoring, Managing and Provisioning)

- ZooKeeper

- Cloudbreak

- Ambari

Scheduling

- Oozie

Data Access

- Batch (MapReduce)

- Script (Pig)

- SQL (Hive)

- NoSQL (HBase and Accumulo)

- Stream (Storm)

- Search (Solr)

- In-Memory (Spark)

- Others (Big SQL and HAWQ)

Data Management

- HDFS

Real-Time Cybersecurity Engine

- Apache Metron
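The Batch (MapReduce) entry above refers to Hadoop's map, shuffle and reduce model. As a rough single-process illustration, the classic word count can be sketched in plain Python. This is not Hadoop's Java API; the function names `map_phase`, `shuffle`, `reduce_phase` and `word_count` are hypothetical and only mirror the three stages.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(lines: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Mapper (hypothetical name): emit (word, 1) for every whitespace-separated token."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle/sort: group all values emitted for the same key, ordered by key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(groups: Dict[str, List[int]]) -> Dict[str, int]:
    """Reducer: sum the counts collected for each word."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    # Chain the three stages exactly as the framework would, but in one process.
    return reduce_phase(shuffle(map_phase(lines)))


if __name__ == "__main__":
    docs = ["Hadoop stores data in HDFS", "Spark and Hadoop process data"]
    print(word_count(docs))
```

In a real cluster the mappers and reducers run as separate tasks on different nodes and the framework performs the shuffle over the network; Hadoop Streaming is one way to run Python scripts in those roles.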

Big Data Paradigms Integrated with Hadoop:

- Internet of Things (Consumer devices, Industrial Internet and Connected Business)

- Artificial Intelligence (Autonomy, Smart Devices and Prescriptive Analytics)

- Cloud Computing (PaaS/SaaS applications and Ephemeral Use Cases)

- Streaming Data (Real-time applications and retail applications)

- Sensor Network Data Processing

- Image processing (Private cloud service offloading)

- Bioinformatics Applications

- Data mining (Earth science data diagnosis)

- Social Media (YouTube, Facebook and Twitter)

Our strengths include:

- All major concepts of the Hadoop framework

- Hadoop Eclipse plugins for visual programming environments

- 10+ years of experience in MongoDB, Cassandra and Hive

- MapReduce programming, including proprietary tools for developing complex jobs

- MapReduce development in C++ and Java, with expertise in other JVM-based languages

- More than 500 Hadoop projects developed across the integrated domains listed above

- Experience with HDFS in heterogeneous environments

- Hadoop deployment in the cloud, with experience setting up both single-cluster and multi-cluster configurations

- Experience integrating Hadoop with Nagios and Ganglia for monitoring

We are currently working on the following topics:

- Effective Memory Management and Load Balancing Approach in Cloud Environment

- Empirical Analysis of an Item-Based Combined Filtering Recommendation Algorithm Using an Enhanced MapReduce Paradigm

- A Novel Classifier Approach in a Distributed Framework for Optimized Big Data Analysis

- Analyzing Water Resources Data with R and Shiny Using Cloud-Based Tools

- An Incremental K-Means Clustering Technique for Parallel Forecasting

- In-Memory Big Data Analytics with Two-Level Storage on a Private Cloud

- Greedy-Based Privacy Preservation Using NoSQL in Data Mining

- Segregating Satellite Images for Natural Calamity Prediction

- A MapReduce-Based Technique for Extracting Auxiliary Information from Global Navigation Satellite System (GNSS) Receivers

- Large-Scale Distributed Data Science from Scratch Using Apache Spark 2.0

- HDFS (Hadoop Distributed File System) Based Cyclic Redundancy Check (CRC) for Efficient Data Integrity Verification in Cloud Storage

- Designing Big Data-Based Collaborative Production Management

- A MapReduce-Based Semantic Service Recommendation Approach Using User-Generated Feedback

- Survey of Query-Driven Visual Exploration Using GPU-Accelerated Web GIS

- A Hybrid Framework on Spark for Multi-Paradigm Analytical Data Processing
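The HDFS-based CRC topic builds on how HDFS protects data: it stores a checksum for every fixed-size chunk of a block (the `dfs.bytes-per-checksum` setting, 512 bytes by default) and recomputes it on read to detect corruption. The sketch below illustrates that per-chunk idea only; it is not HDFS code and not the method of the listed project. It assumes Python's `zlib.crc32` (plain CRC-32), whereas HDFS defaults to CRC32C, and the helper names `chunk_checksums` and `corrupted_chunks` are hypothetical.

```python
import zlib
from typing import List

CHUNK_SIZE = 512  # mirrors the HDFS default for dfs.bytes-per-checksum


def chunk_checksums(data: bytes, chunk_size: int = CHUNK_SIZE) -> List[int]:
    """Compute one CRC-32 value per fixed-size chunk (the last chunk may be shorter)."""
    return [
        zlib.crc32(data[offset:offset + chunk_size])
        for offset in range(0, len(data), chunk_size)
    ]


def corrupted_chunks(data: bytes, expected: List[int], chunk_size: int = CHUNK_SIZE) -> List[int]:
    """Return the indices of chunks whose recomputed CRC differs from the stored one."""
    actual = chunk_checksums(data, chunk_size)
    if len(actual) != len(expected):
        raise ValueError("chunk count mismatch: data length changed")
    return [i for i, (a, e) in enumerate(zip(actual, expected)) if a != e]


if __name__ == "__main__":
    block = bytes(range(256)) * 8      # 2048 bytes, i.e. 4 chunks of 512 bytes
    stored = chunk_checksums(block)    # computed once, at write time
    damaged = bytearray(block)
    damaged[700] ^= 0xFF               # flip bits inside chunk 1 (bytes 512..1023)
    print(corrupted_chunks(bytes(damaged), stored))  # -> [1]
```

Checksumming per chunk rather than per file means a reader only needs to recompute the checksums of the chunks it actually reads, and a mismatch pinpoints the damaged region so that a healthy replica can be used instead.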