What is big data analytics? Big data analytics combines non-traditional technologies and techniques for processes such as data collection, categorization, and analysis. In general, big data refers to datasets so massive that they are difficult to collect and process with typical tooling. A PhD research proposal in big data analytics focuses mainly on these big data concepts.

What is a PhD research proposal?

A PhD research proposal describes an innovative, novel research idea and serves as the framework of the research project. Specifically, it is the first part of the research: it poses a question about existing work and outlines an appropriate answer. A PhD research proposal in big data analytics explains the research idea clearly by showing how it will overcome the drawbacks of existing work.

What does big data analytics do?

Big data analytics supports protection functions, and its foremost advantage is better decision-making. It is also used to extract insights such as market trends, customer preferences, and hidden patterns.

Big data analytics process flow

The data analytics process flow has four types, which can be adapted to the requirements of a project. The types are:

- Information

- Data

- Decision

- Insight

The relevant processes and appropriate steps are used to implement all of the above big data analytics types. We explain and demonstrate them once you get in touch with us, and clearer explanations of these types are available on our website. We provide confidential research support with in-depth research and analysis. The process of data integration is described in the following section.

Big data integration

Data integration is a set of processes used to gather and retrieve data from disparate sources and turn it into meaningful, valuable information. It is used to provide reliable data from various sources.

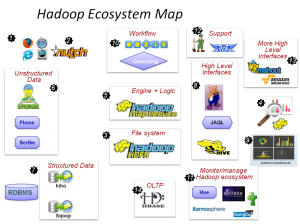

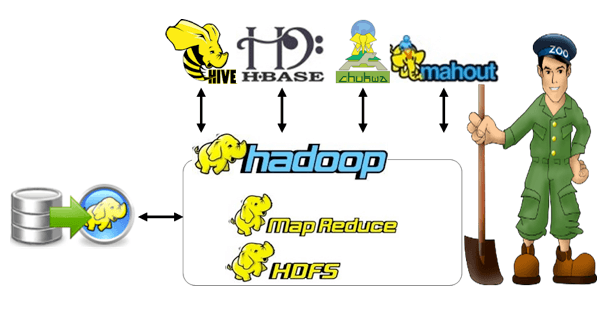

Big data integration is deployed to manage structured and unstructured data, and as a result it has to deliver performance and scalability at a very large scale. Every component of the big data ecosystem, including NoSQL databases, Hadoop, and analytics tools, has its own methods for extracting, transforming, and loading data.

Traditional data integration

ETL stands for extract, transform, and load: data is extracted from the source, transformed into the required form, and then loaded into the warehouse. The integration process runs across mixed application environments as it moves data from the source to the destination.
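The extract, transform, and load steps can be illustrated with a minimal sketch. It is not tied to any particular ETL tool: the sample CSV, the `sales` table, and the function names are assumptions made for illustration, and an in-memory SQLite database stands in for the warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (stands in for a real file or API).
RAW_CSV = """customer,amount,currency
alice, 10.50 ,usd
bob,,usd
carol,7.25,USD
"""


def extract(text):
    """Extract: read the source rows as dictionaries keyed by column name."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim whitespace, normalize case, drop rows with no amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip incomplete records
        cleaned.append(
            (row["customer"].strip().title(), float(amount), row["currency"].strip().upper())
        )
    return cleaned


def load(records, conn):
    """Load: write the cleaned records into the (assumed) warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (customer TEXT, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", records)
    conn.commit()


def run_etl(text, conn):
    """Run the three steps in order and return what ended up in the warehouse."""
    load(transform(extract(text)), conn)
    query = "SELECT customer, amount, currency FROM sales ORDER BY customer"
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")  # in-memory stand-in for a warehouse
    for row in run_etl(RAW_CSV, warehouse):
        print(row)
```

Keeping each step in its own function mirrors the source-to-destination flow described above and makes it easy to swap the source or the destination independently.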

In these ways, big data analytics systems are classified efficiently. Research scholars can gain more insight into the process of big data integration by looking at our successfully delivered projects in this field, in which we have used many different methods to produce the best research work. Get in touch with us for any kind of assistance regarding big data analytics. Now it is time to discuss the stages of data preparation in big data analytics.

Big Data Analytics Data Preparation Stage

The data preparation stage is the part of big data analytics in which the quality of big data is improved. This improvement happens through data preprocessing and integration. Big data analytics has six main functions:

- Data Preparation

- Model

- Data evaluation

- Deployment

- Monitor

- Data collection

Many substantial operations take place in data preprocessing. For your reference, we have listed some of them:

- Feature extraction

- Elimination of anomalies

- Outlier detection

- Noise reduction

- Feature extraction

  - The extraction process requires a big data system that can handle continuous data streams and unstructured data

- Elimination of anomalies

  - The main aim of eliminating anomalies is to improve the quality of big datasets

  - It is needed because big datasets contain unwanted, irregular, or unusual data values

- Outlier detection

  - Outlier detection is used to create high-quality datasets

  - Various steps and methods are deployed to identify and delete outliers

  - This matters because outliers degrade the quality of big datasets

- Noise reduction

  - This process is used to remove irrelevant noise from the data

  - Big data is commonly collected from sensor data in IoT projects and from social media data streams
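Two of the preprocessing operations above can be sketched in a few lines. The example below is only one possible approach: it assumes an interquartile-range rule for outlier detection and a simple moving average for noise reduction, and the function names and sensor readings are hypothetical.

```python
from statistics import quantiles


def remove_outliers_iqr(values, k=1.5):
    """Drop values outside [Q1 - k*IQR, Q3 + k*IQR] (assumed outlier rule)."""
    q1, _, q3 = quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if low <= v <= high]


def moving_average(values, window=3):
    """Reduce noise by averaging each run of `window` consecutive values."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [
        sum(values[i:i + window]) / window
        for i in range(len(values) - window + 1)
    ]


if __name__ == "__main__":
    # Hypothetical temperature readings from an IoT sensor; 95.0 is a faulty spike.
    readings = [20.1, 20.3, 19.8, 20.0, 95.0, 20.2, 19.9, 20.4]
    cleaned = remove_outliers_iqr(readings)
    print(cleaned)
    print(moving_average(cleaned, window=3))
```

Removing outliers before smoothing keeps a single faulty reading from distorting every average whose window contains it.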

Big data analytics is a field that develops every day and has scope for future expansion. As a researcher, you therefore need to stay up to date in this field. Our developers and research experts will provide you with information from relevant and updated sources, and our formatting and editing team will help you with advanced analytics. Let us now talk about the algorithm implementation process in big data.

Algorithm Implementation

Record linkage is one of the fundamental approaches implemented with MapReduce, but it is prone to load imbalance: skewed block sizes degrade performance. The record linkage algorithms include:

- Record linkage with specific constraints

- Temporal record linkage

- Incremental record linkage

- Linking text snippets to structured data

- Meta blocking pruning matching

- Record linkage with specific constraints

  - False attribute values are recognized and separated from the correct values

  - This is achieved by combining data fusion with record linkage

- Temporal record linkage

  - It is used to identify attribute values that are out of date

  - It permits linkage across temporal records

- Incremental record linkage

  - Linkage results are updated incrementally as new records arrive, rather than recomputed in full, to preserve performance

- Linking text snippets to structured data

  - It uses linkage techniques to connect text snippets with the matching structured data

- Meta blocking pruning matching

  - It addresses the inefficiency of blocking on large-scale heterogeneous data

  - It is used to improve the efficiency of blocking
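The role of blocking in record linkage can be shown with a small single-machine sketch. It is not a MapReduce implementation: the sample records, the blocking key (city plus first letter of the name), and the 0.8 similarity threshold are all assumptions chosen for illustration.

```python
from collections import defaultdict
from difflib import SequenceMatcher
from itertools import combinations

# Hypothetical records: (id, name, city).
RECORDS = [
    (1, "Jon Smith", "Boston"),
    (2, "John Smith", "Boston"),
    (3, "Mary Jones", "Denver"),
    (4, "Marie Jones", "Denver"),
    (5, "Alan Brown", "Boston"),
]


def blocking_key(record):
    """Assumed blocking key: city plus the first letter of the name."""
    _, name, city = record
    return (city.lower(), name[0].lower())


def build_blocks(records):
    """Group records so that only records sharing a key are compared."""
    blocks = defaultdict(list)
    for record in records:
        blocks[blocking_key(record)].append(record)
    return blocks


def similarity(a, b):
    """String similarity in [0, 1] from the standard library's SequenceMatcher."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def link_records(records, threshold=0.8):
    """Return id pairs whose names are similar enough within the same block."""
    matches = []
    for block in build_blocks(records).values():
        for left, right in combinations(block, 2):
            if similarity(left[1], right[1]) >= threshold:
                matches.append((left[0], right[0]))
    return sorted(matches)


if __name__ == "__main__":
    blocks = build_blocks(RECORDS)
    # Block sizes hint at load balance: one very large block dominates the work.
    print({key: len(members) for key, members in blocks.items()})
    print(link_records(RECORDS))
```

Because comparisons grow quadratically with block size, a single oversized block accounts for most of the work, which is why skewed block sizes cause the load imbalance mentioned above when blocks are distributed across workers.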

Explanations and descriptions of these algorithms are available on our website. With references from benchmark sources and updated information from reputed journals, we will make your work on a PhD research proposal in big data analytics much easier.

So far we have discussed the significance of big data analytics. Let us now discuss the details of the research proposal.

What is included in the research proposal?

A PhD research proposal is an implementation plan that includes a summary of about 350 words. It also contains technical facts and supporting statements for the research. In general, a research proposal brings together hypotheses, research questions, methodologies, etc.

How detailed should a PhD proposal be?

First, a research proposal follows a key structure that conveys the researcher's insights and thought process. It outlines the idea of the proposition and, as a guideline, should stay within about 2000 words. It is not a thesis, so it does not need to give a detailed summary of the research work; the researcher should keep the content to the point. There is no strict limit on the number of words or pages, but the researcher should constrain the length with the reader in mind.

The structure of a PhD research proposal in big data analytics is listed below for your reference.

How do you prepare a research proposal?

- Title

- Background

- Research questions

- Research methodology

- Time schedule and work plan

- Bibliography

- Title

  - The title gives the reader the first clue about the research work, so the researcher is responsible for framing a distinctive, eye-catching title

- Background

  - It describes the limitations the researcher has identified in previous work

- Research questions

  - The research gaps are formulated as research questions

- Research methodology

  - The techniques used and the procedures followed in the research

  - The framework of the theoretical resources

  - The benefits and limits of the work

- Time schedule and work plan

  - The framework for development, implementation, write-ups, etc.

  - There are two modes of study: part-time (within 6 years) and full-time (within 3 years)

- Bibliography

  - Cite the materials and resources used for the research

The segments mentioned above are the fundamental and characteristic parts of a PhD research proposal. When constructing a research proposal, try to bring something new to every section.

In conclusion, big data is a significant field of study and area of research with the potential to make a career interesting and successful. It is a key approach taken up by many researchers, organizations, and individuals. Our experts and developers have worked in the field of big data analytics research for the past two decades, so they are well experienced. Reach us to get complete support on a PhD research proposal in big data analytics.