Projects on Hadoop

Projects on Hadoop offer a marvellous way to begin your dream journey with a big goal and accomplish it with big achievements. Our knowledgeable professionals are PhD holders, gold medallists, and university rank holders who provide you with high-standard training and guidance. We give students special, excellent training so that they can keep pace with design methodologies, rapidly growing cutting-edge technologies, and the latest IT trends. Our experts also offer step-by-step guidance while you write your thesis, documentation, and manuscripts. If you have any queries about Hadoop, you can send them to us by email at any time.


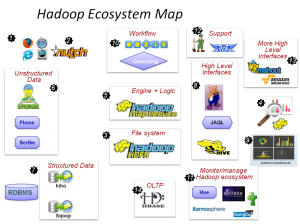

Projects on Hadoop open up a high-tech scientific research world for students and research scholars to start their scientific journey with great ambition. We have completed 10000+ Projects on Hadoop using trending research concepts including distributed big data processing, HDFS file storage optimization, Hadoop-based fault diagnosis, in-memory parallel processing, Hadoop-based protein motif sequence clustering, HDFS data encryption, etc.

Now, let us see some ways to get data into Hadoop:

- Third-party vendor connectors such as SAS/ACCESS or SAS Data Loader for Hadoop

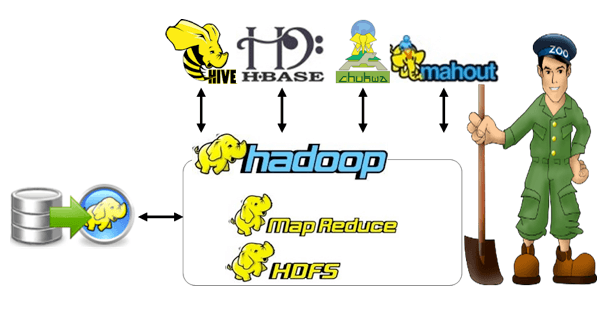

- Import structured data from relational databases into Hive, HBase, and HDFS using Sqoop, which can also export data from Hadoop to data warehouses and relational databases

- Load data continuously from logs into Hadoop with Flume

- Use Java API calls or HDFS shell commands to load files into the file system

- Copy or write files to a mounted HDFS file system

- Set up a cron job that scans a directory for new files and imports them into HDFS. This is useful, for example, for emails downloaded at regular intervals
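The cron-job approach in the last item can be sketched in Python as below. This is a minimal sketch, not a production importer: the inbox directory, the HDFS target directory, and the `.imported` state file are hypothetical names, and the script assumes the `hdfs` command-line client is on the `PATH` of the user that runs it. Each run copies only files it has not recorded before, using `hdfs dfs -put`.

```python
import os
import subprocess
import sys

# Hypothetical locations: adjust them to your own cluster and mail spool.
LOCAL_INBOX = "/var/spool/incoming"
HDFS_TARGET = "/data/incoming"
# Hypothetical state file that lists the files already copied into HDFS.
STATE_FILE = os.path.join(LOCAL_INBOX, ".imported")


def load_seen(state_file):
    """Return the set of file names recorded by earlier runs (empty if none)."""
    if not os.path.exists(state_file):
        return set()
    with open(state_file) as f:
        return {line.strip() for line in f if line.strip()}


def find_new_files(directory, seen):
    """List regular, non-hidden files in `directory` that are not in `seen`."""
    new = []
    for name in sorted(os.listdir(directory)):
        path = os.path.join(directory, name)
        if os.path.isfile(path) and not name.startswith(".") and name not in seen:
            new.append(name)
    return new


def put_command(local_path, hdfs_dir):
    """Build the `hdfs dfs -put` command that copies one local file into HDFS."""
    return ["hdfs", "dfs", "-put", local_path, hdfs_dir]


def run_once(directory=LOCAL_INBOX, hdfs_dir=HDFS_TARGET, state_file=STATE_FILE):
    """Copy every new file into HDFS, record it, and return the names copied."""
    if not os.path.isdir(directory):
        return []
    seen = load_seen(state_file)
    copied = []
    for name in find_new_files(directory, seen):
        # check=True raises on failure, so the file is not recorded and is retried next run.
        subprocess.run(put_command(os.path.join(directory, name), hdfs_dir), check=True)
        with open(state_file, "a") as f:
            f.write(name + "\n")
        copied.append(name)
    return copied


if __name__ == "__main__":
    imported = run_once()
    print(f"imported {len(imported)} file(s)", file=sys.stderr)
```

A crontab entry such as `*/15 * * * * python3 /opt/scripts/hdfs_import.py` (script path hypothetical) would run it every 15 minutes. Recording a file only after `hdfs dfs -put` succeeds means a failed copy is simply retried on the next run.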

Within the Hadoop framework, HDFS plays a critical role as the storage manager. Depending on the requirements, we can store huge amounts of data in HDFS or HBase. In this article, we explain the HDFS schema.

- We can store and process structured data such as RDBMS data, unstructured data such as emails, blog posts, videos, images, Word files, text, and PDFs, and semi-structured data such as log files and XML documents.

- Goals of HDFS: fault detection and recovery, organizing and processing huge datasets, and hardware at data (running computation close to where the data is stored)

- Directory structure for HDFS files: /user/<username>, /etl, /tmp, /data, /app, /metadata

- Strategies in HDFS schema design: partitioning, bucketing, and denormalizing

- HDFS NameNode strategies: replication, balancing, and heartbeats

- Future of HDFS: block management moving to the DataNodes

- Image and edits (the NameNode's namespace image and edit log) stored within HDFS

- Support for heterogeneous storage: archival storage and SSDs

- Netty: better thread management and connection handling

- Typical sources of data stored in HDFS include:

  - Sensor data: barcode reader data

  - Power grid data, transport data, and search engine data

  - Sentiment data: comments or shares on news items and blog posts

  - Stock exchange data: data on customers' buy and sell orders

  - Black box data: data from airplanes, helicopters, and jets

  - Social media data: Twitter, Google+, and Facebook data
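The partitioning and bucketing strategies listed above decide where each record lands in the HDFS directory tree. The sketch below assumes a hypothetical sales table under `/data/sales`: partitions are Hive-style `key=value` sub-directories per day, and records are bucketed by a hash of their key. It uses `zlib.crc32` purely for illustration; Hive and other engines apply their own hash functions and file naming, so the `bucket_NNNNN` names here are hypothetical.

```python
import zlib
from datetime import date


def partition_path(base, day):
    """Hive-style partition directory with one level each for year, month and day."""
    return f"{base}/year={day.year}/month={day.month:02d}/day={day.day:02d}"


def bucket_for(key, num_buckets):
    """Map a key to a bucket number with a stable hash modulo the bucket count.

    crc32 is an illustrative choice; it is not the hash Hive itself uses.
    """
    if num_buckets <= 0:
        raise ValueError("num_buckets must be positive")
    return zlib.crc32(key.encode("utf-8")) % num_buckets


def target_file(base, day, key, num_buckets):
    """Full HDFS path for a record: its day partition plus its (hypothetical) bucket file."""
    bucket = bucket_for(key, num_buckets)
    return f"{partition_path(base, day)}/bucket_{bucket:05d}"


if __name__ == "__main__":
    # Hypothetical table stored under the /data area of the directory layout.
    print(target_file("/data/sales", date(2024, 3, 7), "customer-42", 8))
```

Queries that filter on a date only need to read the matching partition directories, and rows with the same key always fall into the same bucket, which helps joins and sampling on that key. A stable hash such as crc32 is used instead of Python's built-in `hash()`, which is randomized per process for strings.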
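The heartbeat and replication strategies listed above work together: DataNodes report to the NameNode periodically, and a node that stays silent too long is treated as dead, so the block replicas it held have to be re-created elsewhere. The toy model below illustrates only that bookkeeping; it is not the NameNode's actual code, and the `HeartbeatMonitor` class is hypothetical. The `timeout_s` parameter stands in for HDFS's own timeout configuration, and the target replication factor defaults to 3, matching the HDFS default.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class HeartbeatMonitor:
    """Toy model of NameNode bookkeeping for heartbeats and replication."""

    timeout_s: float  # silence longer than this marks a DataNode as dead
    replication: int = 3  # target number of replicas per block
    # DataNode -> time of its last heartbeat
    last_seen: dict[str, float] = field(default_factory=dict)
    # block id -> DataNodes holding a replica of that block
    block_locations: dict[str, set[str]] = field(default_factory=dict)

    def heartbeat(self, node: str, now: float) -> None:
        """Record that `node` reported in at time `now`."""
        self.last_seen[node] = now

    def dead_nodes(self, now: float) -> set[str]:
        """DataNodes whose last heartbeat is older than the timeout.

        Nodes that have never sent a heartbeat are not tracked in this sketch.
        """
        return {n for n, t in self.last_seen.items() if now - t > self.timeout_s}

    def under_replicated(self, now: float) -> dict[str, int]:
        """Blocks with fewer live replicas than the target, mapped to how many are missing."""
        dead = self.dead_nodes(now)
        missing = {}
        for block, nodes in self.block_locations.items():
            live = len(nodes - dead)
            if live < self.replication:
                missing[block] = self.replication - live
        return missing
```

In a real cluster the NameNode then schedules new copies of the missing replicas on live DataNodes; balancing is a separate step that evens out disk usage across nodes.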

List of Topics in Projects on Hadoop:

- Load Evaluation Based on Formula for Real Time Applications in Distributed Streaming Paradigm

- Time Constraint Data Analysis in OpenStack Cloud Computing Framework by Elastic Spatial Query Processing

- Mitigating Memory Pressure for Service in Service Oriented Data Processing System

- Big Data Processing Industrial Framework for Internet of Vehicles on Car Stream Using Hadoop

- Discourse Relation for Twitter Sentiment Detection Based on Collision Theory

- Adaptive Fault Diagnosis and Detection Using Distributed Computing Techniques with Parsimonious Gaussian Mixture Models

- Efficient and Scalable In-Memory Data Store and MapReduce Paradigm Construction of Suffix Array

- Tweet Classification and Segmentation Using KNN Scheme for Rumor Identification

- Data Driven Science to Build Infrastructure of Cost Effective Balanced HPC Using Theoretical Approach

- Large Scale Data Processing Generalization for Coarse-Grained Parallelism in One MapReduce Job

- Material Related Physical Paradigms Using Real Time Data Analysis and Acquisition Framework

- Autonomous Application and Resource Management Based Market in Private Clouds

- Resource Provisioning in Public Clouds for Deadlines Task Batch Based Workflows

- Efficient Resource Allocation by Harnessing Parallel Execution Paths in Multi-Stage Big Data Systems

- Distributed Data Mining Algorithm for Achieving Consumable Big Data Analytics